Package Summary

This package can be used to generate and manage poses for the direct mode of 3-D object search. The direct mode is used as an opening procedure for Active Scene Recognition. The poses will be generated so that they cover the current environment map. There are different modes to generate these poses. One is based on a grid and a second on a recording of the "cropbox record" mode in the asr_state_machine.

- Maintainer: Pascal Meißner <asr-ros AT lists.kit DOT edu>

- Author: Borella Jocelyn, Karrenbauer Oliver, Meißner Pascal

- License: BSD

- Source: git https://github.com/asr-ros/asr_direct_search_manager.git (branch: master)

Description

The AsrDirectSearchManager manages the views for the direct mode of Active Scene Recognition. The direct search is one of the modes implemented in the 3-D object search that we interrelated with scene recognition. Its main goal is to generate views that cover the search space, i.e., the robot's environment. It makes it possible to search for objects without prior knowledge. The direct search can be started in the asr_state_machine with mode 1 or 3.

Functionality

Different algorithms are implemented to generate the views for the direct search. The views depend on the current search space: if the search space changes, the views have to be generated anew. The direct search is designed so that the views are not generated on the fly but precalculated, which saves time during the 3-D object search.

Currently, two different approaches are implemented to generate the views for the 3-D object search. Both aim to cover the whole search space while minimising the number of views.

Grid Mode

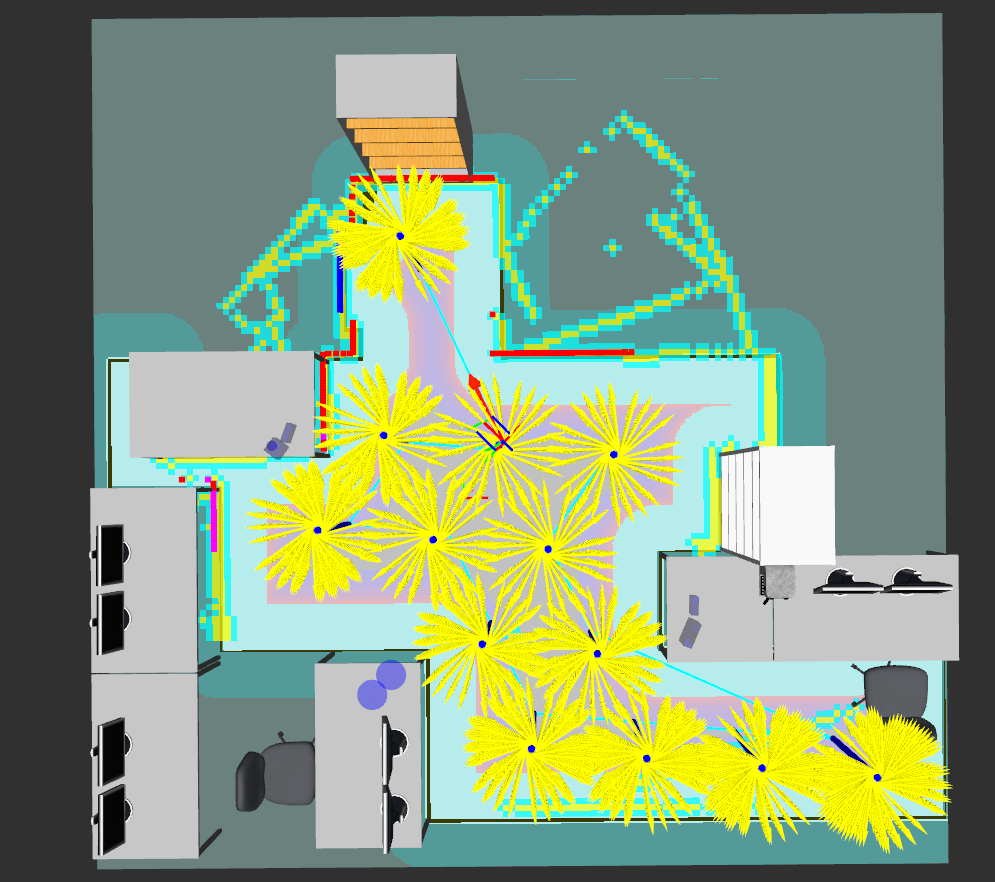

One implementation is the grid mode. The grid can be generated with the asr_grid_creator. The idea is to divide the search space with a grid of equidistant grid points. At each grid point, the asr_direct_search_manager generates the subset of views necessary to cover the space around that grid point. The number of views depends on the size of the frustum.
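The relation between grid spacing, frustum size and number of views can be illustrated with a minimal sketch. It is not the package's implementation: the function names, the rectangular search space and the assumption that views at a grid point differ only by pan angle and tile a full turn without overlap are all hypothetical simplifications.

```python
import math

def grid_points(x_min, x_max, y_min, y_max, spacing):
    """Return equidistant (x, y) grid points covering a rectangle (assumed search space)."""
    eps = 1e-9  # guards against floating-point round-off in the division
    nx = int(math.floor((x_max - x_min) / spacing + eps)) + 1
    ny = int(math.floor((y_max - y_min) / spacing + eps)) + 1
    return [(x_min + i * spacing, y_min + j * spacing)
            for i in range(nx) for j in range(ny)]

def pan_angles_for_full_turn(fov_h_deg):
    """Return pan angles in degrees so that views of width fov_h_deg cover 360 degrees."""
    n = int(math.ceil(360.0 / fov_h_deg))  # a wider frustum needs fewer views
    step = 360.0 / n
    return [k * step for k in range(n)]

# Hypothetical example values: a 2 m x 1 m area, 1 m spacing, 30 degree horizontal FOV.
points = grid_points(0.0, 2.0, 0.0, 1.0, 1.0)
pans = pan_angles_for_full_turn(30.0)
views = [(x, y, pan) for (x, y) in points for pan in pans]
```

In this simplified model, halving the horizontal field of view doubles the number of views per grid point, which is why the frustum size matters for the total search time.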

RecordMode

The second implementation for managing the views is the record mode. Its basic idea differs considerably from that of the grid mode, although both share the same aim. One drawback of the grid mode is that few views cover the boundaries of the room, yet these places are usually the most interesting.

The views for this mode are generated by the asr_state_machine with the cropbox_record_mode. The basic idea is not to generate the views directly. Instead, object hypotheses are generated, and the views are chosen for them. For more information, see the documentation of the asr_state_machine.

The views generated by the cropbox_record_mode of the asr_state_machine are not optimal to use as they are. Different approaches are implemented to minimise the number of views and the cost of reaching them. See "4.3 Parameters" for how to specify the optimization. One approach is to concatenate views. For many generated views the robot pose is nearly equal and only the PTU config differs. For those views, the robot poses are merged into one pose with multiple PTU configs. In the end the number of views does not change, but the robot has to move less, and moving is one of the main costs.
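The concatenation step can be sketched as a greedy merge. This is only an illustration under stated assumptions: the function name and the tuple layout are hypothetical, and robot poses are compared by position only (in the spirit of concatRobotPosePositionDistanceThreshold), ignoring orientation.

```python
import math

def concat_approx_equal_poses(views, distance_threshold):
    """Merge views whose robot positions are approximately equal.

    views: list of (x, y, pan, tilt) tuples (assumed layout).
    Returns a list of ((x, y), [(pan, tilt), ...]) entries.
    """
    merged = []
    for x, y, pan, tilt in views:
        for (mx, my), ptu_configs in merged:
            if math.hypot(x - mx, y - my) <= distance_threshold:
                # Close enough: reuse the existing robot pose, add a PTU config.
                ptu_configs.append((pan, tilt))
                break
        else:
            # No nearby robot pose found: start a new one.
            merged.append(((x, y), [(pan, tilt)]))
    return merged

# Hypothetical recorded views: two of them are 5 cm apart.
recorded = [(0.0, 0.0, 0.0, 0.0), (0.05, 0.0, 30.0, 0.0), (1.0, 1.0, 0.0, 10.0)]
robot_states = concat_approx_equal_poses(recorded, 0.1)
```

The total number of PTU configs equals the number of input views, but the robot base only has to drive to two positions instead of three.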

Prior knowledge

One drawback of both implemented modes is that each produces a lot of views. Searching for objects without prior knowledge is very time-consuming and inefficient, so there is a way to use prior knowledge. Depending on the given information, the views are reordered; no new views are generated. The prior knowledge can be generated in multiple ways, but in each case it has to represent absolute poses of different objects. Their reachability, distribution and accumulations influence the order.

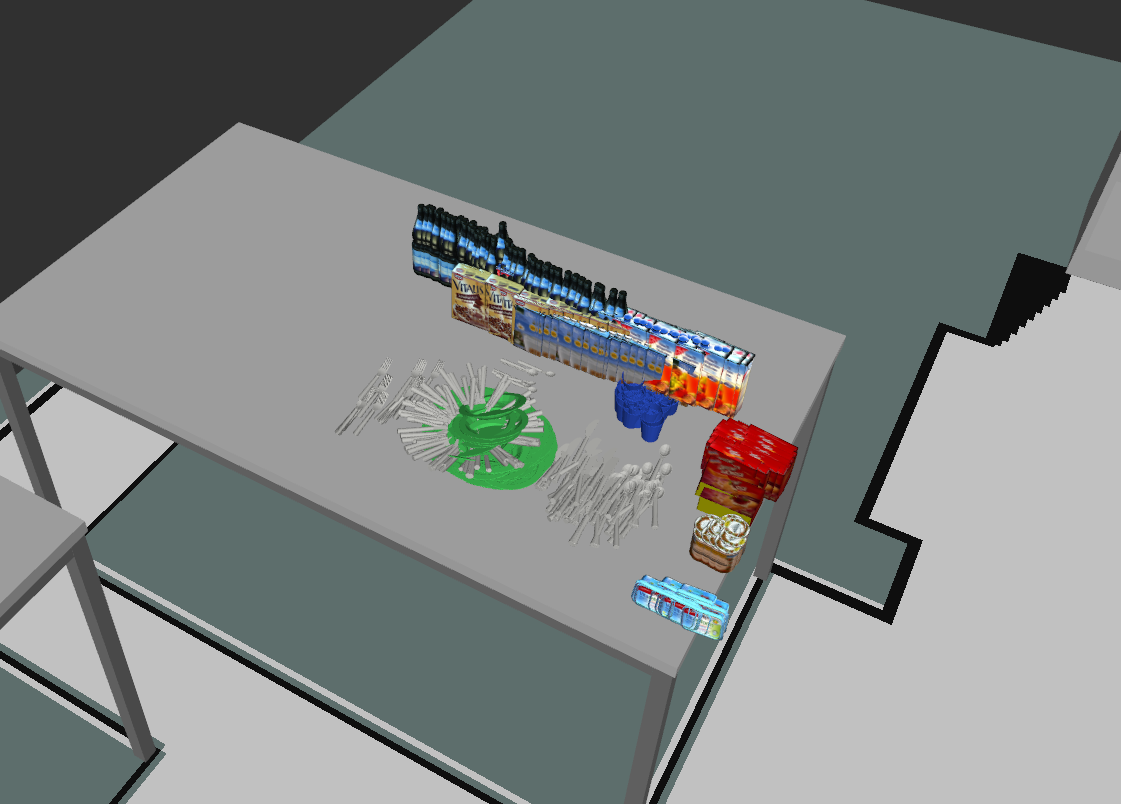

In the given system, the absolute poses of the objects were a by-product of training the scenes for the indirect search. They represent the poses at which the objects were observed by the robot. The picture shows some poses of the recorded objects, without their normals. Other, more intelligent ways of adapting the poses of the objects could be implemented.

DirectSearchAction

The communication with this package is implemented with an action server. The following action can be used.

#goal definition

command: the command which should be executed:

- Reset: resets the manager to the initial state

- BackToInitial: call this if there were other search steps between GetNextRobotState calls. The poses will be filtered depending on already visited viewports and reordered depending on the current position.

- GetNextRobotState: gets the next robot state to take with additional information (see below)

searchedObjectTypesAndIds: the object_types_and_ids which are searched for at the moment

#result definition

goalRobotPose: the next robot pose

goalCameraPose: the next camera pose (belonging to the goalRobotPose) if present

pan: the next pan to take

tilt: the next tilt to take

remainingPTUPoses: the PTU poses which are left for this goalRobotPose (just as information)

remainingRobotPoses: the robot poses which are left (just as information)

remainingPosesDistances: the remaining distance for the remainingRobotPoses to take (just as information)

isSameRobotPoseAsBefore: whether goalRobotPose is the same as the one from the previous call

isNoPoseLeft: whether there are no poses left at all

arePosesFromDemonstrationLeft: whether there are poses left after this one which are sorted based on the prior knowledge

filteredSearchedObjectTypesAndIds: the searchedObjectTypesAndIds after filtering. They will be filtered if some objects were already searched at the goalCameraPose or if it is more likely to find a subset of objects
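How a client might step through the poses with these commands can be sketched without ROS. Everything apart from the command and field names listed above is an assumption: the FakeDirectSearchServer class is a hypothetical stand-in for the action server, the Result tuple carries only a subset of the result fields, and the sketch assumes that a result with isNoPoseLeft set carries no usable pose.

```python
from collections import namedtuple

# Subset of the result fields described above.
Result = namedtuple(
    "Result", "goalRobotPose pan tilt isSameRobotPoseAsBefore isNoPoseLeft")

class FakeDirectSearchServer:
    """Hypothetical stand-in for the action server, for illustration only."""

    def __init__(self, views):
        self._views = list(views)  # entries: (robot_pose, pan, tilt)
        self._index = 0
        self._last_pose = None

    def send(self, command):
        if command == "Reset":
            self._index = 0
            self._last_pose = None
            return None
        if command == "GetNextRobotState":
            if self._index >= len(self._views):
                return Result(None, 0.0, 0.0, False, True)
            pose, pan, tilt = self._views[self._index]
            self._index += 1
            same = pose == self._last_pose
            self._last_pose = pose
            return Result(pose, pan, tilt, same, False)
        raise ValueError("unknown command: %s" % command)

def run_direct_search(server):
    """Step through all poses; return (base moves, views taken)."""
    server.send("Reset")
    moves, views = 0, 0
    while True:
        result = server.send("GetNextRobotState")
        if result.isNoPoseLeft:
            break
        if not result.isSameRobotPoseAsBefore:
            moves += 1  # the robot base would drive to goalRobotPose here
        views += 1      # the PTU would be set to (pan, tilt) and detection run
    return moves, views

server = FakeDirectSearchServer(
    [((0.0, 0.0), 0.0, 0.0), ((0.0, 0.0), 30.0, 0.0), ((1.0, 1.0), 0.0, 0.0)])
moves, views = run_direct_search(server)
```

A real client would send these commands as action goals and would also issue BackToInitial after other search steps were interleaved, which this sketch leaves out.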

Usage

GridMode:

- Generate Views (See

- With prior knowledge:

In asr_direct_search_manager/launch/direct_search_manager.launch

Adapt the path to the file of the generated/recorded views initializedGridFilePath

In asr_direct_search_manager/param/direct_search_manager_settings.yaml

Set directSearchMode to 1/grid_manager

Set reorderPosesByNBV to true

- Without prior knowledge (as above with reorderPosesByNBV = false, or as below):

In asr_direct_search_manager/launch/direct_search_manager.launch

Adapt the path to the file of the generated views gridFilePath

In asr_direct_search_manager/param/direct_search_manager_settings.yaml

Set directSearchMode to 3/grid_initialisation

Set reorderPosesByNBV to false

RecordMode:

- Generate Views (See Tutorials)

In asr_direct_search_manager/launch/direct_search_manager.launch

Adapt the path to the file of the recorded views recordFilePath

In asr_direct_search_manager/param/direct_search_manager_settings.yaml

Set directSearchMode to 2/recording_manager

Depending on use of prior knowledge: Set reorderPosesByNBV to false/true

For both:

Start the system as described in "3.4. Start system" of asr_resources_for_active_scene_recognition

Needed packages

Needed software

Start system

This package is part of the active_scene_recognition. When using the asr_resources_for_active_scene_recognition, this node will be started automatically.

To use it standalone it can be started with

roslaunch asr_direct_search_manager direct_search_manager.launch

However, it needs most of the nodes of the active_scene_recognition to be running.

Unit tests can be run with

roscore

cd catkin_ws/build

make run_tests_asr_direct_search_manager

ROS Nodes

Subscribed Topics

/asr_flir_ptu_driver/state (sensor_msgs/JointState) of asr_flir_ptu_driver: needed to use the prior knowledge

Parameters

Launch file

asr_direct_search_manager/launch/direct_search_manager.launch

gridFilePath: the path to the config.xml generated by the asr_grid_creator, which contains robot poses of the grid

initializedGridFilePath: the path to the initialized grid, which contains robot and camera poses of the grid. Can be generated by the grid init mode of the asr_state_machine

yaml file

asr_direct_search_manager/param/direct_search_manager_settings.yaml

fovH: horizontal camera field-of-view angle (will be used in grid_initialisation)

fovV: vertical camera field-of-view angle (will be used in grid_initialisation)

clearVision: PTU angle free of vision obstacles (will be used in grid_initialisation)

directSearchMode: can be 1 for grid_manager, 2 for recording_manager and 3 for grid_initialisation

grid_manager: the poses will be read from the initializedGridFilePath. It is possible to use the reorderPosesByNBV

recording_manager: the poses will be read from the recordFilePath. It is possible to use the reorderPosesByNBV, reorderPosesByTSP, filterMinimumNumberOfDeletedNormals, filterIsPositionAllowed and concatApproxEqualsPoses

grid_initialisation: the poses will be calculated from the config and the fovH, fovV and clearVision. It is not possible to use the reorderPosesByNBV here, because only robot poses and no camera poses are known. Because of that, this mode will be used to initialize the grid for the grid_manager mode. See the tutorials for more information

distanceFunc: the distance function to use: 1 for the service call GetDistance of asr_robot_model_services (accurate, slow) or 2 for the Euclidean distance (approximate, fast)

reorderPosesByNBV: enables the use of prior knowledge by reordering the poses with asr_next_best_view, so that poses which have a higher chance of detecting an object will be taken first. The reordering is based on the recorded_object_poses from the current SQL database

reorderPosesByTSP: whether the poses of the robot states should be reordered with TSP (nearest_neighbour and two_opt)
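The two TSP heuristics named for reorderPosesByTSP can be sketched generically. This is a textbook version under assumptions, not the package's code: poses are reduced to 2-D positions, the Euclidean distance is used (as with distanceFunc 2), the tour is an open path from a given start position, and all function names are hypothetical.

```python
import math

def dist(a, b):
    """Euclidean distance between two (x, y) positions."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def path_length(start, order):
    """Length of the open path start -> order[0] -> order[1] -> ..."""
    total, current = 0.0, start
    for p in order:
        total += dist(current, p)
        current = p
    return total

def nearest_neighbour(start, poses):
    """Greedy construction: always drive to the closest unvisited pose."""
    remaining, order, current = list(poses), [], start
    while remaining:
        nxt = min(remaining, key=lambda p: dist(current, p))
        remaining.remove(nxt)
        order.append(nxt)
        current = nxt
    return order

def two_opt(start, order):
    """Local improvement: reverse segments while that shortens the path."""
    best = list(order)
    improved = True
    while improved:
        improved = False
        for i in range(len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if path_length(start, candidate) < path_length(start, best) - 1e-12:
                    best, improved = candidate, True
    return best

# Hypothetical robot positions and start position.
start = (0.0, 0.0)
poses = [(2.0, 0.0), (1.0, 0.0), (3.0, 0.0), (0.0, 1.0)]
initial_order = nearest_neighbour(start, poses)
final_order = two_opt(start, initial_order)
```

Nearest neighbour gives a quick initial order and two-opt only accepts changes that shorten the path, so the result is never longer than the initial order but is not guaranteed to be optimal.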

viewCenterPositionDistanceThreshold: the threshold below which two positions of view-center poses are considered approximately equal. It is used to filter poses depending on already seen viewports taken from other search modes (indirect search). The threshold for the orientation is /nbv/mHypothesisUpdaterAngleThreshold

filterMinimumNumberOfDeletedNormals: remove all robot states for which fewer than this number of normals were deleted while the poses were recorded

filterIsPositionAllowed: remove all robot states which the robot cannot reach (this was needed due to a bug in asr_next_best_view). It could also be necessary if the colThresh parameter of asr_next_best_view has changed since the poses were recorded

concatApproxEqualsPoses: concatenate robot poses which are approximately equal into one pose with multiple PTU configs. As a result, the robot will move less.

concatRobotPosePositionDistanceThreshold: the threshold below which two positions of robot poses are considered approximately equal for concatenating two robot poses

Needed Services

asr_robot_model_services : IsPositionAllowed, GetDistance, GetRobotPose, GetCameraPose

asr_next_best_view: SetInitRobotState, SetAttributedPointCloud, RateViewports

asr_world_model: GetViewportList

Tutorial

Generating the views for the gridMode: AsrDirectSearchManagerTutorialGrid

Generating the views for the recordMode: AsrDirectSearchManagerTutorialRecord

To execute the direct search: AsrStateMachineDirectSearchTutorial