Introduction

ROS has grown at an amazing rate in recent years in terms of content and contributors. With over 7.3M lines of code, ROS is larger than many other massive open source projects (e.g. Chromium, with only 5.8M lines of code). However, only 27% of the code in ROS has been released so far, so we know little about the remaining 73%.

Why should we care about code quality?

1. Avoiding Catastrophic Failures: Bad code causes bad failures. In 1996 a European Ariane 5 rocket veered off its path and self-destructed. The cause was a faulty software exception routine, triggered by a bad conversion from a 64-bit floating point value to a 16-bit integer.

2. Maintenance and development time: Robot software is amazingly complex. Debugging and testing consume a major portion of the development time. It is therefore necessary to keep the code well organized and structured to reduce potential errors.

3. ROS for commercial applications: Industry and institutions have developed a number of coding standards over time, for example ISO C/C++, MISRA C/C++ or Safety Integrity Level. To use ROS in commercial products, certain standards have to be met.

What is code quality?

Quality itself is actually quite difficult to define. To get an understanding of code quality it is convenient to distinguish between external and internal code quality.

External Quality

External quality is about the goal of the software: the software does what it is supposed to do. In addition, it should run with acceptable performance and have an easily understandable interface.

- The program does what it is supposed to do

- with acceptable performance and

- with acceptable user interface experience (Look & Feel)

Internal Quality

The internal quality of software deals with the source code itself: its structure, organisation etc. You can distinguish between different attributes of source code, e.g. readability, complexity and testability. Too many projects end up with code that is so complex and unclear that it is virtually impossible to maintain. Even a small change can break an unrelated feature, and adding a small feature can take forever.

- Readability: only easily readable code can easily be understood and therefore changed

- Complexity: Simple code is always easier to maintain than complicated code

- Testability: only automatically tested code can be changed without fear of breaking existing behaviour

Code Metrics

Metrics are used by the software industry to quantify the development, operation and maintenance of software. They tell us the status of an attribute of the software and help us to evaluate it in an objective way. We will focus only on code metrics that measure the internal quality of ROS code.

What is a code metric?

"A software quality metric is a function whose inputs are software data and whose output is a single numerical value that can be interpreted as the degree to which software possesses a given attribute that affects its quality."

Source: IEEE Standard 1061
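As a hedged illustration of this definition, the sketch below treats a metric as a plain function that takes source text and returns one number. It computes a simplified comment to code ratio. The function name `comment_to_code_ratio` and the counting rules (only whole-line `//` comments count, block comments are ignored) are assumptions made for this example and are not the rules used by the ROS analysis.

```python
def comment_to_code_ratio(source: str) -> float:
    """Return comment lines divided by code lines (simplified sketch).

    Assumption: only whole-line `//` comments count as comments, and every
    other non-blank line counts as code. Block comments are not handled.
    """
    comment_lines = 0
    code_lines = 0
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # blank lines count as neither comment nor code
        if stripped.startswith("//"):
            comment_lines += 1
        else:
            code_lines += 1
    # Avoid a division by zero for input without any code lines.
    return comment_lines / code_lines if code_lines else 0.0


example = """
// Add two integers.
int add(int a, int b)
{
  return a + b;
}
"""
print(comment_to_code_ratio(example))  # 1 comment line / 4 code lines = 0.25
```

The input is software data (the file content) and the output is a single value that can be compared against a threshold.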

Which metrics does the analysis in ROS contain?

The Software Engineering community has not agreed on a set of metrics universally accepted by the field. Therefore, many people have come up with different ways to measure different attributes of software. However, there are a few metrics that have prevailed. The analysis in ROS includes these and more. The metrics are classified into file-, function-, and class-metrics.

| File-Based | Function-Based | Class-Based |
| --- | --- | --- |
| Comment to code ratio | Cyclomatic complexity | Coupling between objects |
| | Number of executable lines | Number of immediate children |
| | Number of function calls | Weighted methods per class |
| | Maximum nesting of control structures | Deepest level of inheritance |
| | Estimated static path count | Number of methods available in class |
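To make one of the function-based metrics concrete, here is a minimal sketch of cyclomatic complexity, computed as the number of decision points plus one. It analyses Python source with the standard `ast` module purely for illustration. The choice of node types counted as decision points is an assumption of this sketch, and tools used for C++ code may count differently.

```python
import ast

# Node types treated as decision points in this sketch (an assumption;
# real analysis tools differ in what they count).
_DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(func_source: str) -> int:
    """Approximate cyclomatic complexity: decision points + 1."""
    tree = ast.parse(func_source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, _DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra branches.
            decisions += len(node.values) - 1
    return decisions + 1


sample = """
def classify(x):
    if x < 0:
        return "negative"
    for i in range(3):
        if x == i and x % 2 == 0:
            return "small even"
    return "other"
"""
# Two `if`, one `for`, one `and`: 4 decision points + 1 = 5
print(cyclomatic_complexity(sample))
```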

How to read the histograms?

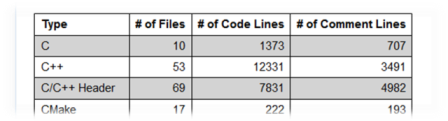

The Code Quality subsection always starts with information about the code quantity of the stack/package. The table is separated into different file types. For each file type it shows the number of files, the lines of code and the lines of comments, and how these relate to the total for that file type.

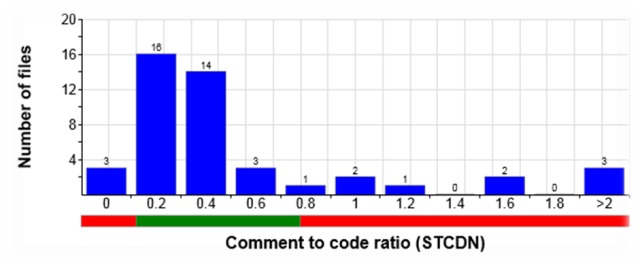

Each histogram represents the analysis results of one metric. The metrics are classified into File-, Function- and Class-Based Metrics. The y-axis always represents the number of files, functions or classes. The x-axis represents the metric value.

The bar-chart under the histogram indicates the recommended values. Only the files, functions or classes that are above the green bar have passed the test.

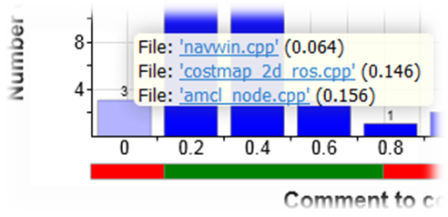

If you want to know which files, functions or classes are outside the recommended threshold, click on the blue bar. A list of the files, functions and classes will appear, showing the specific metric value in brackets. If you click on a name, you will be forwarded automatically to the file as uploaded in the corresponding repository.

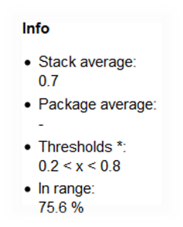

To the right of the chart, a list provides some meta-information. The stack and package averages are computed as the standard average and give you a rough overview. The stack average is also shown on the package pages, so you can compare the package results against the stack average. In addition to the threshold, the "In range" value shows what percentage of the files, functions or classes are within the threshold.
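The two summary values can be sketched as follows. This is a minimal illustration, not the code behind the charts: the function name `in_range_percent`, the sample values, and the choice to treat both threshold bounds as inclusive are assumptions.

```python
from statistics import mean
from typing import Optional, Sequence


def in_range_percent(values: Sequence[float],
                     minimum: Optional[float],
                     maximum: Optional[float]) -> float:
    """Percentage of values inside [minimum, maximum].

    Assumption: bounds are inclusive, and None means "no bound".
    """
    if not values:
        return 0.0
    inside = sum(
        1 for v in values
        if (minimum is None or v >= minimum)
        and (maximum is None or v <= maximum)
    )
    return 100.0 * inside / len(values)


# Hypothetical cyclomatic complexity values of the functions in one package.
complexities = [1, 3, 4, 8, 12, 15, 22, 31]

print(mean(complexities))                     # package average: 12
print(in_range_percent(complexities, 1, 15))  # 6 of 8 in range: 75.0
```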

Thresholds

The recommended thresholds for code metrics vary from one company to another. The following tables therefore collect several sets of thresholds and categorize them into the requirement levels High and Low.

This is a list of the companies/institutions, with links to the sources:

- Homepage, Thresholds: NASA SATC

- Homepage, Thresholds: HIS: Hersteller Initiative Software (manufacturers' software initiative)

- Homepage, Thresholds: KTH: Royal Institute of Technology, Sweden

- Homepage, Thresholds: University of Akureyri in Iceland

The ROS recommended values were derived from the thresholds of the institutions listed above. As a first step, the values with low requirements were chosen. You should treat these values as guidelines until the ROS members agree on official thresholds for ROS.

File based

Metric code: STCDN = Comment to code ratio.

| Requirements | Recommended by | STCDN MIN | STCDN MAX |
| --- | --- | --- | --- |
| High | NASA | 0.2 | 0.3 |
| High | HIS | 0.2 | - |
| Low | University of Akureyri | 0.2 | 0.4 |
| | ROS | 0.2 | - |

Function based

Metric codes: STCYC = Cyclomatic complexity, STXLN = Number of executable lines, STSUB = Number of function calls, STMIF = Maximum nesting of control structures, STPTH = Estimated static path count.

| Requirements | Recommended by | STCYC MIN | STCYC MAX | STXLN MIN | STXLN MAX | STSUB MIN | STSUB MAX | STMIF MIN | STMIF MAX | STPTH MIN | STPTH MAX |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| High | NASA | 1 | 10 | 1 | 50 | - | - | - | - | - | - |
| High | HIS | 1 | 10 | 1 | 50 | 1 | 7 | - | 4 | 1 | 80 |
| Low | KTH | 1 | 15 | 1 | 70 | 1 | 10 | - | 5 | 1 | 250 |
| | ROS | 1 | 15 | 1 | 70 | 1 | 10 | - | 5 | 1 | 250 |

Class based

Metric codes: STCBO = Coupling between objects, STNOC = Number of immediate children, STWMC = Weighted methods per class, STDIT = Deepest level of inheritance, STMTH = Number of methods available.

| Requirements | Recommended by | STCBO MIN | STCBO MAX | STNOC MIN | STNOC MAX | STWMC MIN | STWMC MAX | STDIT MIN | STDIT MAX | STMTH MIN | STMTH MAX |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| High | NASA | - | 5 | - | - | - | 100 | - | 5 | 1 | 20 |
| Low | University of Akureyri | - | - | - | 10 | 1 | 50 | - | 5 | - | - |
| | ROS | 0 | 5 | 0 | 10 | 1 | 100 | - | 5 | 1 | 20 |
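The ROS rows of the three threshold tables can be written down as a small lookup table and used to flag measurements that fall outside the recommended range. This is only a sketch: the dictionary layout, the function name `violations`, the sample measurements, and the inclusive treatment of both bounds are assumptions. `None` stands for "-" (no bound given).

```python
# ROS recommended (MIN, MAX) values taken from the threshold tables.
# None stands for "-" (no bound given).
ROS_THRESHOLDS = {
    "STCDN": (0.2, None),  # Comment to code ratio
    "STCYC": (1, 15),      # Cyclomatic complexity
    "STXLN": (1, 70),      # Number of executable lines
    "STSUB": (1, 10),      # Number of function calls
    "STMIF": (None, 5),    # Maximum nesting of control structures
    "STPTH": (1, 250),     # Estimated static path count
    "STCBO": (0, 5),       # Coupling between objects
    "STNOC": (0, 10),      # Number of immediate children
    "STWMC": (1, 100),     # Weighted methods per class
    "STDIT": (None, 5),    # Deepest level of inheritance
    "STMTH": (1, 20),      # Number of methods available
}


def violations(measured: dict) -> dict:
    """Return the entries of `measured` that fall outside the ROS range.

    Assumption: both bounds are inclusive.
    """
    out = {}
    for code, value in measured.items():
        low, high = ROS_THRESHOLDS[code]
        too_low = low is not None and value < low
        too_high = high is not None and value > high
        if too_low or too_high:
            out[code] = value
    return out


# Hypothetical measurements for a single function.
measured = {"STCYC": 18, "STXLN": 42, "STMIF": 6, "STPTH": 120}
print(violations(measured))  # {'STCYC': 18, 'STMIF': 6}
```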

Installation

To set up the analysis process on your own system, please follow the instructions in this tutorial: InstallationTutorial