ROS for Human-Robot Interaction

ROS for Human-Robot Interaction (or ROS4HRI) is an umbrella term for the ROS packages, conventions and tools that help develop interactive robots with ROS.


ROS4HRI conventions

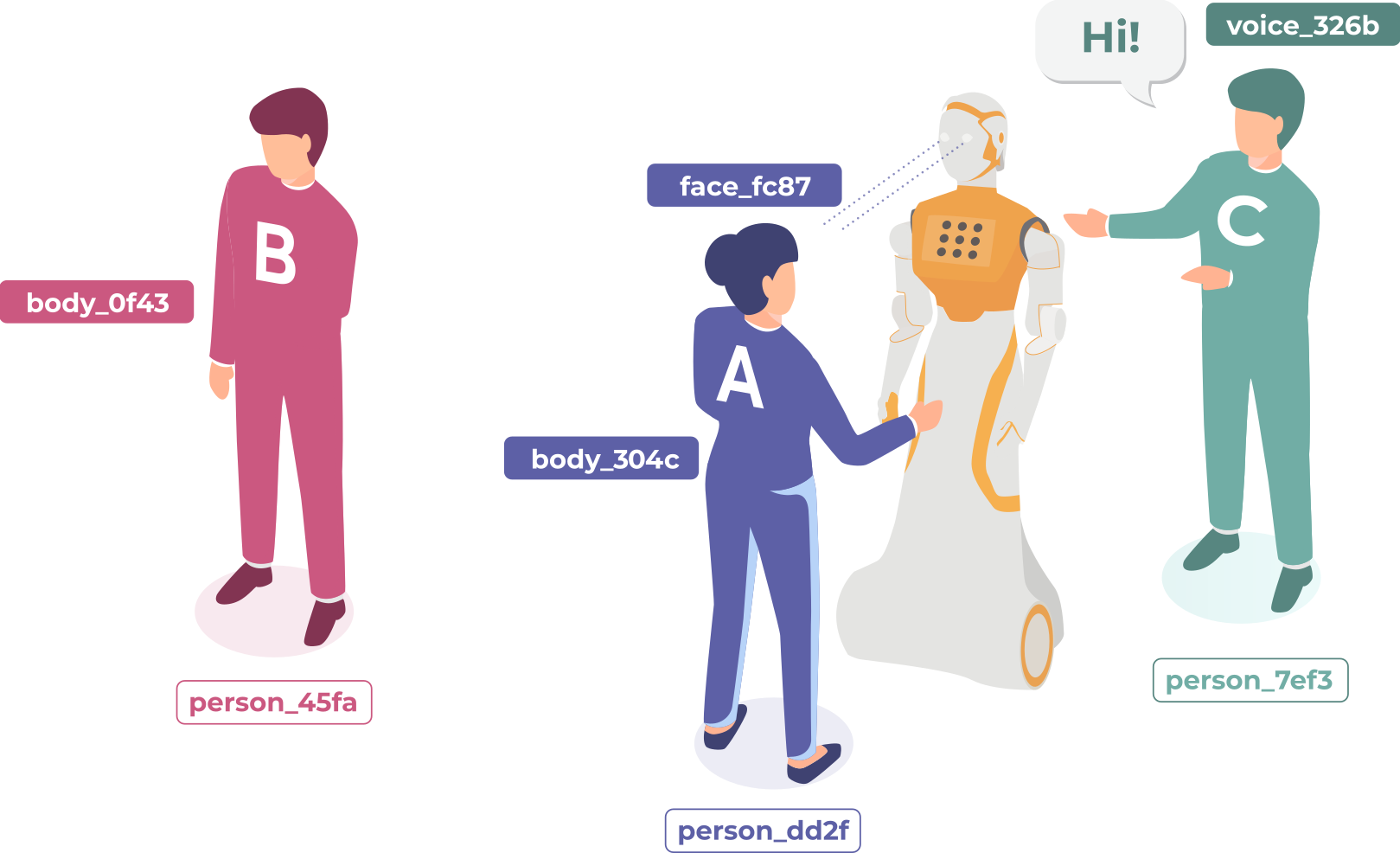

ROS REP-155 (also known as ROS4HRI) defines a set of topics, naming conventions and frames that are important for HRI applications. It was originally introduced in the paper 'ROS for Human-Robot Interaction', presented at IROS 2021.

REP-155 is still evolving. Ongoing changes can be submitted and discussed on the ros-infrastructure/rep GitHub repository.
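To make the naming conventions a bit more concrete, the sketch below builds a few REP-155-style topic and TF frame names in plain Python (no ROS needed). The helper functions (`tracked_topic`, `entity_topic`, `face_frame`, ...) are hypothetical and not part of any ROS4HRI package, and the patterns cover only a small subset of the REP; refer to REP-155 itself for the full, authoritative list.

```python
# Minimal sketch of REP-155-style names (hypothetical helpers, not a ROS4HRI API).
# Assumption: a small subset of the conventions in REP-155, e.g.
#   /humans/faces/tracked        -> IDs of the currently tracked faces
#   /humans/faces/<face_id>/roi  -> per-face sub-topic
#   face_<id>, gaze_<id>, person_<id> -> TF frame names

FEATURES = ("faces", "bodies", "voices", "persons")


def _check(feature: str) -> None:
    if feature not in FEATURES:
        raise ValueError(f"unknown feature type: {feature!r}")


def tracked_topic(feature: str) -> str:
    """Topic listing the IDs of the currently tracked faces/bodies/voices/persons."""
    _check(feature)
    return f"/humans/{feature}/tracked"


def entity_topic(feature: str, entity_id: str, name: str) -> str:
    """Per-entity sub-topic, e.g. /humans/faces/<face_id>/roi."""
    _check(feature)
    return f"/humans/{feature}/{entity_id}/{name}"


def face_frame(face_id: str) -> str:
    return f"face_{face_id}"


def gaze_frame(face_id: str) -> str:
    return f"gaze_{face_id}"


def person_frame(person_id: str) -> str:
    return f"person_{person_id}"


if __name__ == "__main__":
    print(tracked_topic("faces"))                 # /humans/faces/tracked
    print(entity_topic("faces", "abcde", "roi"))  # /humans/faces/abcde/roi
    print(face_frame("abcde"))                    # face_abcde
```

Here `abcde` is just a made-up face ID; in practice the IDs come from the detectors and are read from the corresponding `tracked` topic.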

Common ROS packages

hri_msgs (available in noetic and humble): base ROS messages for Human-Robot Interaction perception

hri_actions_msgs (available in noetic and humble): base ROS messages for Human-Robot Interaction actions (including human intents representation)

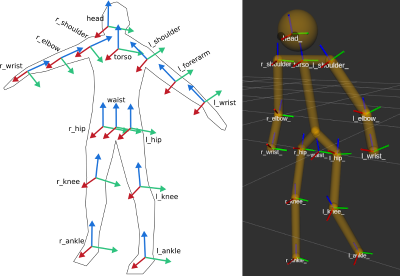

human_description (available in noetic and humble): a parametric kinematic model of a human, in URDF format

libhri (available in noetic and humble): a C++ library to easily access human-related topics

pyhri (available in noetic and humble): a Python library to easily access human-related topics
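Both libraries spare you from managing per-person subscriptions by hand. As a rough illustration of the bookkeeping such a listener has to do (this is not the libhri or pyhri API), the sketch below mirrors the latest list of tracked IDs, as published on a topic such as `/humans/faces/tracked`, and reports which entities appeared or disappeared since the previous update. The `TrackedRegistry` class and its callbacks are hypothetical, and plain Python lists stand in for the ROS messages.

```python
from typing import Callable, Dict, Iterable, List, Optional, Tuple


class TrackedRegistry:
    """Hypothetical helper: keeps one entry per tracked ID and reports
    which IDs appeared or disappeared at each update."""

    def __init__(self,
                 on_new: Optional[Callable[[str], None]] = None,
                 on_lost: Optional[Callable[[str], None]] = None) -> None:
        self.entities: Dict[str, dict] = {}
        self._on_new = on_new
        self._on_lost = on_lost

    def update(self, ids: Iterable[str]) -> Tuple[List[str], List[str]]:
        """Call with the ID list of each new 'tracked' message."""
        current = set(ids)
        known = set(self.entities)
        new = sorted(current - known)
        lost = sorted(known - current)
        for entity_id in lost:
            # a real listener would also tear down per-ID subscriptions here
            del self.entities[entity_id]
            if self._on_lost:
                self._on_lost(entity_id)
        for entity_id in new:
            # placeholder for per-ID state (e.g. subscriptions to /humans/faces/<id>/...)
            self.entities[entity_id] = {"id": entity_id}
            if self._on_new:
                self._on_new(entity_id)
        return new, lost


if __name__ == "__main__":
    registry = TrackedRegistry(on_new=lambda i: print("new face:", i),
                               on_lost=lambda i: print("lost face:", i))
    registry.update(["a", "b"])  # new face: a, new face: b
    registry.update(["b", "c"])  # lost face: a, new face: c
```

Diffing the whole ID list at each message, rather than relying on explicit "appeared"/"disappeared" events, keeps the listener consistent even if some messages are dropped.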

hri_rviz (available in noetic, source supports ROS 2): a collection of RViz plugins to visualise faces, facial landmarks, 3D kinematic models...

rqt_human_radar (ROS 1, ROS 2): an rqt plugin providing a top-down view of the robot's social surroundings.

The source code for these packages (as well as several others) can be found on github.com/ros4hri.

Specialized ROS packages

Feel free to add your own packages to this list, as long as they implement REP-155.

Face detection, recognition, analysis

hri_face_detect (ROS1 & ROS2): a Google MediaPipe-based multi-person face detector.

- Supports:
  - facial landmarks
  - 3D head pose estimation
  - 30+ FPS on CPU only

Body tracking, gesture recognition

hri_body_detect (ROS2): a Google MediaPipe-based 3D full-body pose estimator

- Supports:
  - 2D and 3D pose estimation of multiple people
  - optionally, can use registered depth information to improve 3D pose estimation

hri_fullbody (ROS1 & ROS2): a Google MediaPipe-based 3D full-body pose estimator

- Supports:
  - 2D and 3D pose estimation of a single person (multi-person pose estimation is possible with an external body detector)
  - facial landmarks
  - optionally, can use registered depth information to improve 3D pose estimation
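How exactly these packages use depth is not described here, but a common way to lift a 2D keypoint into 3D with a registered depth image is pinhole back-projection. The sketch below assumes a depth value in metres at pixel (u, v) and camera intrinsics fx, fy, cx, cy (as found, for instance, in the K matrix of a `sensor_msgs/CameraInfo` message); the function name and the example values are hypothetical.

```python
from typing import Tuple


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) with depth Z (metres) into the
    camera optical frame (x right, y down, z forward).

    Hypothetical helper: a generic technique, not hri_fullbody's or
    hri_body_detect's actual implementation.
    """
    if depth <= 0.0:
        raise ValueError("depth must be positive (0 usually means 'no reading')")
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


if __name__ == "__main__":
    # Keypoint 100 px right of the principal point, 2 m away, fx = fy = 500 px
    print(backproject(420, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
    # (0.4, 0.0, 2.0)
```

Depth images are noisy around body edges, so in practice one would typically sample a small neighbourhood around each keypoint (e.g. take a median) rather than a single pixel.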

Voice processing, speech, dialogue understanding

Whole person analysis

hri_person_manager (ROS1 & ROS2): probabilistic fusion of faces, bodies, voices into unified persons.
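The actual fusion in hri_person_manager is probabilistic and is not described on this page. As a much simpler, deterministic stand-in that only illustrates the idea of merging faces, bodies and voices into persons, the sketch below takes candidate matches (pairs of IDs with a confidence), keeps those above a threshold, and groups the linked IDs with a union-find structure; each resulting group would then be treated as one person. The `group_matches` function, the `face:`/`body:`/`voice:` prefixes and the 0.5 threshold are all hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def group_matches(matches: Iterable[Tuple[str, str, float]],
                  threshold: float = 0.5) -> List[List[str]]:
    """Group IDs linked by matches with confidence >= threshold.

    Simplified, non-probabilistic stand-in for person fusion (hypothetical).
    """
    parent: Dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for a, b, confidence in matches:
        find(a)
        find(b)  # register both IDs, even if the match is too weak to merge them
        if confidence >= threshold:
            union(a, b)

    groups: Dict[str, List[str]] = {}
    for x in list(parent):
        groups.setdefault(find(x), []).append(x)
    return sorted(sorted(g) for g in groups.values())


if __name__ == "__main__":
    matches = [("face:a", "body:1", 0.9),   # strong: same person
               ("body:1", "voice:x", 0.8),  # strong: same person
               ("face:b", "body:2", 0.3)]   # weak: kept apart
    print(group_matches(matches))
    # [['body:1', 'face:a', 'voice:x'], ['body:2'], ['face:b']]
```

A hard threshold like this cannot weigh conflicting evidence (e.g. one face strongly matching two bodies), which is one reason a probabilistic approach is preferable for the real package.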

Group interactions, gaze behaviour

hri_engagement (ROS1 & ROS2): real-time engagement assessment, based on visual metrics (gaze direction, proximity).
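The engagement model used by hri_engagement is not detailed here. As a toy illustration of how the two visual cues it mentions could be combined, the sketch below treats a person as engaged when they are close enough to the robot and their gaze points roughly towards it. The function name, the robot-at-origin frame convention and both thresholds (30 degrees, 2 m) are hypothetical.

```python
import math
from typing import Sequence


def is_engaged(person_pos: Sequence[float], gaze_dir: Sequence[float],
               max_angle_deg: float = 30.0, max_distance: float = 2.0) -> bool:
    """Toy engagement test (hypothetical, not hri_engagement's algorithm).

    person_pos: position of the person's head in the robot frame (robot at origin), metres.
    gaze_dir:   person's gaze direction in the same frame (any non-zero length).
    """
    to_robot = [-c for c in person_pos]                 # vector from person to robot
    dist = math.sqrt(sum(c * c for c in to_robot))
    if dist == 0.0 or dist > max_distance:              # degenerate or too far away
        return False
    g_norm = math.sqrt(sum(c * c for c in gaze_dir))
    if g_norm == 0.0:                                   # no usable gaze estimate
        return False
    cos_a = sum(a * b for a, b in zip(to_robot, gaze_dir)) / (dist * g_norm)
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
    return angle <= max_angle_deg                       # looking roughly at the robot


if __name__ == "__main__":
    print(is_engaged((1.5, 0.0, 0.0), (-1.0, 0.0, 0.0)))  # True: close, facing the robot
    print(is_engaged((1.5, 0.0, 0.0), (1.0, 0.0, 0.0)))   # False: looking away
```

A real system would also smooth this decision over time, so that a brief glance away does not immediately flip the person to "disengaged".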

Tutorials

You can access the ROS4HRI tutorials here.

More tutorials are available on the ROS4HRI GitHub page: https://ros4hri.github.io/ros4hri-tutorials/