Only released in EOL distros:

Package Summary

The aim of this stack is to grasp an office chair safely. This requires recognizing the chair and generating a suitable grasp motion. For easier use, the task is split into three parts, each covering a significant section of the whole: recognition of the chair, estimation of the grasp positions, and generation of the motion.

- Author: Maintained by Jan Metzger

- License:

- Source: svn http://alufr-ros-pkg.googlecode.com/svn/trunk/student_projects/chair_grasping

Project Statement

Mobile robotics still faces a number of problems today. Things that are trivial for humans are very difficult for a robot. These include localisation in the environment through the recognition of objects, and the actual manipulation of those objects.

Our Approach

In our project we implemented a chair grasping motion.

Documentation

Prerequisites

- a PR2 robot

- a Kinect on the head of the PR2

- a well lit room

- an office chair

- a model of the chair

- enough room around the PR2

Overview

This stack generates a grasp motion for grasping an object (a chair).

Cases

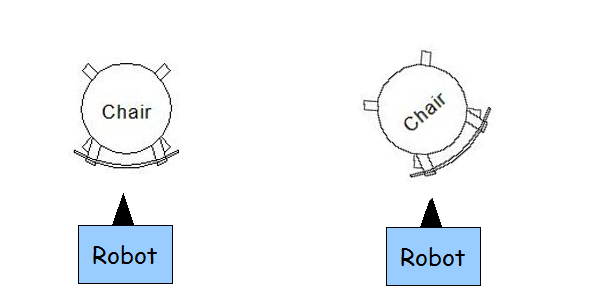

To guarantee a safe motion, we must determine the orientation of the chair. For simplicity, we consider only two different orientations of the chair.

chair_recognition

The chair_recognition package detects whether an object matching our model is in front of the robot. If there is one, it is published.

estimate_grasp_position

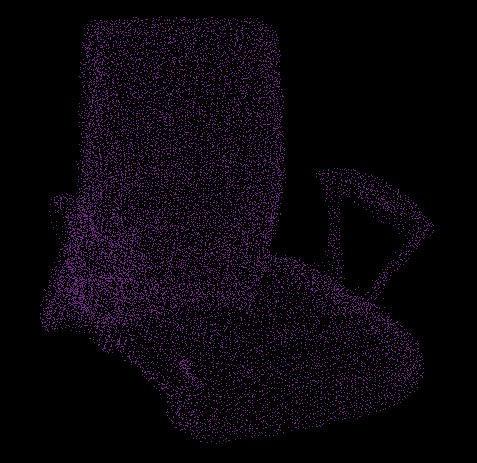

Here we receive the published object and reduce it so that only a slice of the chair's backrest is considered. The chair model is reduced to this slice with the PassThrough filter.
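The reduction step can be illustrated with a minimal sketch in plain Python. It assumes the filter behaves like PCL's `pcl::PassThrough` (configured there through `setFilterFieldName` and `setFilterLimits`): points are kept only if one chosen coordinate lies inside a closed interval. The axis, the limits and the sample points below are assumptions made for illustration, not values taken from this stack.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres

AXIS_INDEX = {"x": 0, "y": 1, "z": 2}


def pass_through(points: Iterable[Point], axis: str,
                 lower: float, upper: float) -> List[Point]:
    """Keep only the points whose coordinate on `axis` lies in [lower, upper]."""
    i = AXIS_INDEX[axis]
    return [p for p in points if lower <= p[i] <= upper]


# Hypothetical chair cloud: one seat point, two backrest points,
# and one point above the band we are interested in.
chair = [
    (0.80, 0.00, 0.45),
    (0.95, -0.20, 0.80),
    (0.95, 0.20, 0.82),
    (0.95, 0.00, 1.05),
]

# Assumed limits: a horizontal band between 0.75 m and 0.90 m height.
backrest_slice = pass_through(chair, "z", 0.75, 0.90)
print(backrest_slice)
```

Only the two points inside the band survive, which is the kind of backrest slice the grasp positions are estimated from.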

grasp_motion

From the grasp positions we generate a trajectory for the arms of the robot. The pregrasp position and the grasp position differ only in their y-coordinates.
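The relation between the two positions can be sketched as follows. This is only an illustration: the function name `pregrasp_from_grasp`, the 0.10 m clearance and the sample coordinates are hypothetical, and the sketch assumes the usual ROS convention in which the y-axis of the robot frame points to the left.

```python
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def pregrasp_from_grasp(grasp: Point, y_offset: float) -> Point:
    """Return a pregrasp position with the same x and z as the grasp
    position and the y-coordinate shifted by y_offset."""
    x, y, z = grasp
    return (x, y + y_offset, z)


# Hypothetical grasp points on the left and right edge of the backrest.
left_grasp = (0.60, 0.25, 0.10)
right_grasp = (0.60, -0.25, 0.10)

# Assumed clearance of 0.10 m: each gripper starts further out on its own side
# and then only has to move along y to reach the grasp position.
left_pregrasp = pregrasp_from_grasp(left_grasp, 0.10)
right_pregrasp = pregrasp_from_grasp(right_grasp, -0.10)
print(left_pregrasp, right_pregrasp)
```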

How does it work

The model we used

Installation

The following steps need to be done on the robot.

rosmake chair_grasping

Start Setting

First, place an office chair (or a chair that matches the model) in front of the robot so that the back of the chair is parallel to the y-axis of the robot with respect to /torso_lift_link. This is the simplest case.
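Whether a chair is placed in this way can be checked numerically. The sketch below is not part of the stack; it assumes that two points on the left and right edge of the backrest are known in the /torso_lift_link frame, and the function names and the 5 degree tolerance are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def backrest_yaw(left: Point, right: Point) -> float:
    """Signed angle in radians between the line right -> left and the y-axis,
    measured in the x-y plane. 0.0 means the backrest is parallel to y."""
    dx = left[0] - right[0]
    dy = left[1] - right[1]
    return math.atan2(dx, dy)


def is_parallel_to_y(left: Point, right: Point,
                     tolerance_rad: float = math.radians(5.0)) -> bool:
    """True if the backrest deviates from the y-axis by at most the tolerance."""
    return abs(backrest_yaw(left, right)) <= tolerance_rad


# Hypothetical backrest edge points: one aligned chair, one rotated chair.
aligned = is_parallel_to_y((0.60, 0.25, 0.10), (0.60, -0.25, 0.10))
rotated = is_parallel_to_y((0.70, 0.20, 0.10), (0.50, -0.20, 0.10))
print(aligned, rotated)
```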

Launching basic controllers and applying basic settings

Basic Controller

Execute the following commands on the PR2:

roslaunch pr2_teleop_general pr2_teleop_general_joystick_bodyhead_only.launch

roslaunch openni_camera openni_node.launch

The following command requires the simple_robot_control package to be installed.

roslaunch simple_robot_control simple_robot_control_without_collision_checking.launch

If you are interested in a visualization, also start rviz on the base station. The clouds to visualize are listed below.

Basic Settings

With the PR2 controller, raise the robot's torso so that its shoulders are higher than the chair. This is not strictly necessary, but it gives the simple_robot_control action server a better kinematic result.

Object Recognition

To launch the object recognition, execute the following command in the chair_recognition directory on the PR2:

roslaunch chair_recognition.launch

Make sure that the model of the chair exists. To do so, open the launch file and check the following line:

<param name="object_name" value="$(find chair_recognition)/office_chair_model.pcd" />

For the value, specify the absolute file path of your model.

Estimation of the Gripper Points

To estimate the gripper points, execute the following command on the PR2 in the estimation_grasp_positions directory:

rosrun subscriber_test

Some output data is printed on the command line. If the chair isn't correctly recognized, there will be no output.

Generate the motion

The following command, run in the movement directory, generates the motion of the robot. Execute it on the PR2.

roslaunch movement.launch

Calling the rosservice

To execute the motion, call the following rosservices:

rosservice call /grasp_chair -> grasping the chair

rosservice call /release_chair -> going back to the start-pose

The service /grasp_chair runs the program only once. If the orientation of the chair needs to be corrected, call the service again until the robot grasps the chair correctly.

rosservice call /grasp_chair || rosservice call /grasp_chair
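The repeated call can be written as a small retry loop. The sketch below is a generic illustration and does not use the ROS API: `call_grasp` and `grasp_ok` are hypothetical callables. On the robot, `call_grasp` could wrap the `rosservice call /grasp_chair` command and `grasp_ok` could simply ask the operator; here both are replaced by stand-ins so the example runs without a robot.

```python
from typing import Callable


def grasp_with_retries(call_grasp: Callable[[], None],
                       grasp_ok: Callable[[], bool],
                       max_attempts: int = 3) -> int:
    """Call the grasp service until grasp_ok() is true.

    Returns the number of calls made. Raises RuntimeError if the grasp is
    still not correct after max_attempts calls.
    """
    for attempt in range(1, max_attempts + 1):
        call_grasp()
        if grasp_ok():
            return attempt
    raise RuntimeError(
        "chair not grasped correctly after %d attempts" % max_attempts)


# Stand-ins for the real service call and the operator's judgement:
# the first grasp is judged incorrect, the second one correct.
calls = []
verdicts = iter([False, True])
attempts = grasp_with_retries(lambda: calls.append("/grasp_chair"),
                              lambda: next(verdicts))
print(attempts, calls)
```

Bounding the number of attempts keeps the loop from running forever when the chair cannot be grasped from its current position.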

Which clouds should be visualized in rviz

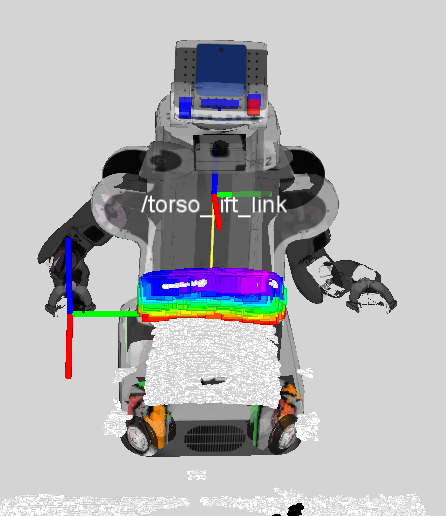

For best monitoring, visualize the following clouds in rviz:

- office_scene

- GripperPunkte_single (only the grasp-points)

If you visualize all the clouds above, you should get something similar to the screenshot.

The colored surface of the chair is the cloud generated by the PassThrough filter.

Video

Watch a video of the PR2 grasping a chair on YouTube.

Limitation

Above we discussed the simplest case, in which the back of the chair is parallel to the robot.

Outlook

The problem with the correction is that it is only an inflexible attempt, because nothing is known about the pivot of the chair.
