Only released in EOL distros:

Package Summary

Time delay compensated single and multi sensor fusion framework based on an EKF

- Author: Maintained by Stephan Weiss, Markus Achtelik

- License: BSD

- Source: git https://github.com/ethz-asl/ethzasl_sensor_fusion.git (branch: None)

Package Summary

Time delay compensated single and multi sensor fusion framework based on an EKF

- Maintainer: Maintained by Stephan Weiss <none AT none DOT com>, Markus Achtelik <none AT none DOT com>

- Author: Maintained by Stephan Weiss, Markus Achtelik

- License: BSD

- Bug / feature tracker: http://github.com/ethz-asl/ethzasl_sensor_fusion/issues

- Source: git https://github.com/ethz-asl/ethzasl_sensor_fusion.git (branch: None)

Contents

Disclaimer

This site and the code provided here are under active development. Even though we try to only release working high quality code, this version might still contain some issues. Please use it with caution.

Overview

This Stack contains a sensor fusion framework based on an Extended Kalman Filter (EKF) for vehicle pose estimation including intra- and inter-sensor calibration. It assumes full 6DoF motion of the vehicle and an IMU centred platform. It is a self-calibrating approach rendering a vehicle a true power-on-and-go system. More precisely it estimates the

- vehicle 6DoF pose and velocity which can be used in an underlying pose controller

- IMU biases such as acceleration and gyroscope bias (intra-sensor calibration)

- sensor transformation between an additional sensor (e.g. camera, GPS) and the IMU (inter-sensor calibration)

- pose scaling in the case of a scaled pose measurement sensor (e.g. camera)

- roll and pitch drift of a non-global pose measurement sensor (e.g. camera)

The framework is particularly designed to work on an Micro Aerial Vehicle (MAV) carrying an IMU and one single camera performing visual odometry as only navigation sensors (see publications below and ethzasl_ptam). It has the following properties:

The pose and velocity estimates yield sufficiently clean values for pose control the MAV with this filter output. If used together with the asctec_mav_framework and ethzasl_ptam stacks reliable pose estimates at 1kHz are possible.

- The intra- and inter-sensor calibration estimates make it possible to deploy this algorithm quickly on any vehicle without tedious calibration procedures. Thus, this framework renders your platform power-on-and-go in real environments.

- The scaling and drift estimates ensure long term operability despite potential drift of an additional sensor such as the visial odometry when using a monocular camera. Note that only the roll and pitch drift of the visual odometry are observable, however, these are the most crucial states to keep an MAV airborne as it ensures the alignment to gravity.

This package contains the single sensor fusion code to fuse one sensor with an IMU. Our extension to fuse multiple sensors with an IMU is documented here and available here. We recommend that you read in particular this tutorial if you plan to fuse multiple sensors with an IMU.

Relevant publications are:

Stephan Weiss. Vision Based Navigation for Micro Helicopters PhD Thesis, 2012 pdf

Stephan Weiss, Markus W. Achtelik, Margarita Chli and Roland Siegwart. Versatile Distributed Pose Estimation and Sensor Self-Calibration for Autonomous MAVs. in IEEE International Conference on Robotics and Automation (ICRA), 2012. pdf

Stephan Weiss, Davide Scaramuzza and Roland Siegwart, Monocular-SLAM–based navigation for autonomous micro helicopters in GPS-denied environments, Journal of Field Robotics (JFR), Vol. 28, No. 6, 2011, 854-874. pdf

Stephan Weiss and Roland Siegwart. Real-Time Metric State Estimation for Modular Vision-Inertial Systems. in IEEE International Conference on Robotics and Automation (ICRA), 2011. pdf

Simon Lynen, Markus Achtelik, Stephan Weiss, Margarita Chli and Roland Siegwart, A Robust and Modular Multi-Sensor Fusion Approach Applied to MAV Navigation. in Proc. of the IEEE/RSJ Conference on Intelligent Robots and Systems (IROS), 2013. pdf

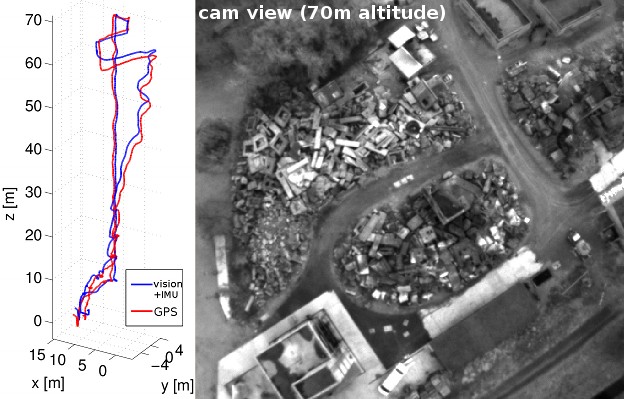

Vision based MAV navigation in not so small environments: We use ethzasl_ptam and ethzasl_sensor_fusion for vision based navigation for computationally constrained MAVs in large environments:

Top image: vision based height test up to 70m above ground and landing in the same mission

Bottom image: long-term vision based navigation for 360m (one battery life-time) with about 0.4% position drift (Bing Maps)

Packages

ssf packages are for single sensor fusion msf packages are for multi sensor fusion

ssf_core: core package containing the EKF propagation steps using IMU inputs and the shell for the update step

ssf_updates: contains update sensor modules with the update equations that are fed to the core

Installation

The following commands will fetch and compile the ethzasl_sensor_fusion stack.

# Fetch ethzasl_sensor_fusion stack git clone git://github.com/ethz-asl/ethzasl_sensor_fusion.git ethzasl_sensor_fusion # Update ROS_PACKAGE_PATH (if necessary) export ROS_PACKAGE_PATH=$ROS_PACKAGE_PATH:`pwd`/ethzasl_sensor_fusion # build rosmake ethzasl_sensor_fusion

Change Log

August 21st 2012

- Added link to PhD Thesis Weiss2012

June 27th 2012

created new package sensor_fusion_comm, where all necessary messages should now be placed in. The messages in ssf_core are for backwards compatibility of logfiles only.

- added parameters for initial values of the camera to IMU calibration in pose_sensor

- added parameter to switch between fixed measurement covariance and covarinace from the sensor

added parameter to # select if sensor measures its position w.r.t. world (e.g. Vicon) or the position of the world coordinate system w.r.t. the sensor (e.g. ethzasl_ptam)

- some internal cleanup, added documentation

May 9th 2012

- starting to release this stack...

Report a Bug

<<TracLink(REPO COMPONENT)>>